Tech

Do you frequently use ChatGPT? A study says you may be lonely.

Imagine telling a chatbot about your awful day, only to feel even more isolated as a result. While most people use ChatGPT for practical tasks, a small minority of heavy users may be trading instant comfort for a growing sense of loneliness or even emotional reliance, according to new research from OpenAI and the Massachusetts Institute of Technology (MIT).

First study: Behavioural analysis

The results are based on two investigations. The first was an observational analysis of around 40 million ChatGPT interactions, in which researchers used automated tools to look for signs of emotional engagement in conversations. By evaluating more than 4 million chats and running targeted user surveys, the team was able to link the kinds of interactions users had with the chatbot to their self-reported attitudes.

This large-scale analysis offered a broad picture of ChatGPT usage and showed that affective, or emotionally expressive, interactions are relatively uncommon. However, a small fraction of frequent users, especially those using ChatGPT’s Advanced Voice Mode, showed notable emotional attachment, with some even calling the AI a friend.

Second study: Controlled trial

The second study was a four-week controlled trial with about 1,000 individuals. The purpose of this Institutional Review Board-approved study was to ascertain the potential effects of ChatGPT’s various features, such as text versus voice conversations, on users’ wellbeing. In order to investigate the effects on social relationships, loneliness, emotional reliance, and potentially problematic technology use, participants were randomly allocated to different model configurations.

The study found that while short-term voice mode use was associated with better emotional health, regular use over an extended period of time may have the reverse effect. Notably, the study discovered that, in contrast to more neutral, task-focused interactions, personal conversations—those in which users and the AI engaged in more emotionally charged dialogue—were linked to higher degrees of loneliness.

Emotional dependence on the chatbot

Why the inconsistent outcomes? Well, that depends on you. Non-personal discussions may unintentionally increase emotional reliance on the chatbot, especially among frequent users, according to the research. Meanwhile, people who were already prone to attachment in relationships and thought of the AI as a friend were more likely to experience negative effects. This implies that individual characteristics, such as emotional needs and initial loneliness, can strongly shape how AI interactions affect wellbeing.

Notably, the majority of users are unaffected. ChatGPT is typically asked for spreadsheet assistance rather than introspection. Nonetheless, the research identifies a specific group: frequent voice users who depend on the bot for emotional support. Imagine late-night voice conversations about existential dread, or oversharing of personal drama. Although such users were uncommon, they displayed stronger signs of reliance and loneliness.

To evaluate these patterns, the researchers created a set of automated classifiers named ‘EmoClassifiersV1’. These tools use a large language model to identify particular emotional indicators in conversations. The classifiers were arranged in two tiers: top-level detectors that recognized broad themes such as loneliness and problematic use, and sub-classifiers that focused on more subtle facets of both user and AI messages. This two-tier approach let the team evaluate millions of interactions quickly while protecting user privacy and picking up on subtle behavioral cues.

The takeaway

The study thus raises the question of how “human-like” AI should behave. ChatGPT’s voice mode, which is designed to be engaging, walks a fine line. Lively banter may benefit a 10-minute conversation, while lengthy sessions could backfire. According to the study, OpenAI is using these findings to improve its models, aiming to balance helpfulness with nudges toward healthier usage.

But “AI bad” isn’t the only lesson to be learned here. Most people still view ChatGPT as a tool rather than a therapist. The actual lesson? Setting limits is important. It might be time to call a human friend if your nighttime ritual consists of spending hours discussing philosophy with a chatbot. AI isn’t meant to replace relationships, as the study drily points out, and if you use it that way, don’t expect a happy ending.

The paper emphasizes that this is preliminary research, not a final verdict. To clarify how AI interactions influence, or distort, our social lives, the teams intend to conduct additional research. For the time being, their suggestion is straightforward: use ChatGPT as a Swiss Army knife, not as a diary. And if you find yourself referring to it as “mate”? Perhaps log out and call a real person.

Tech

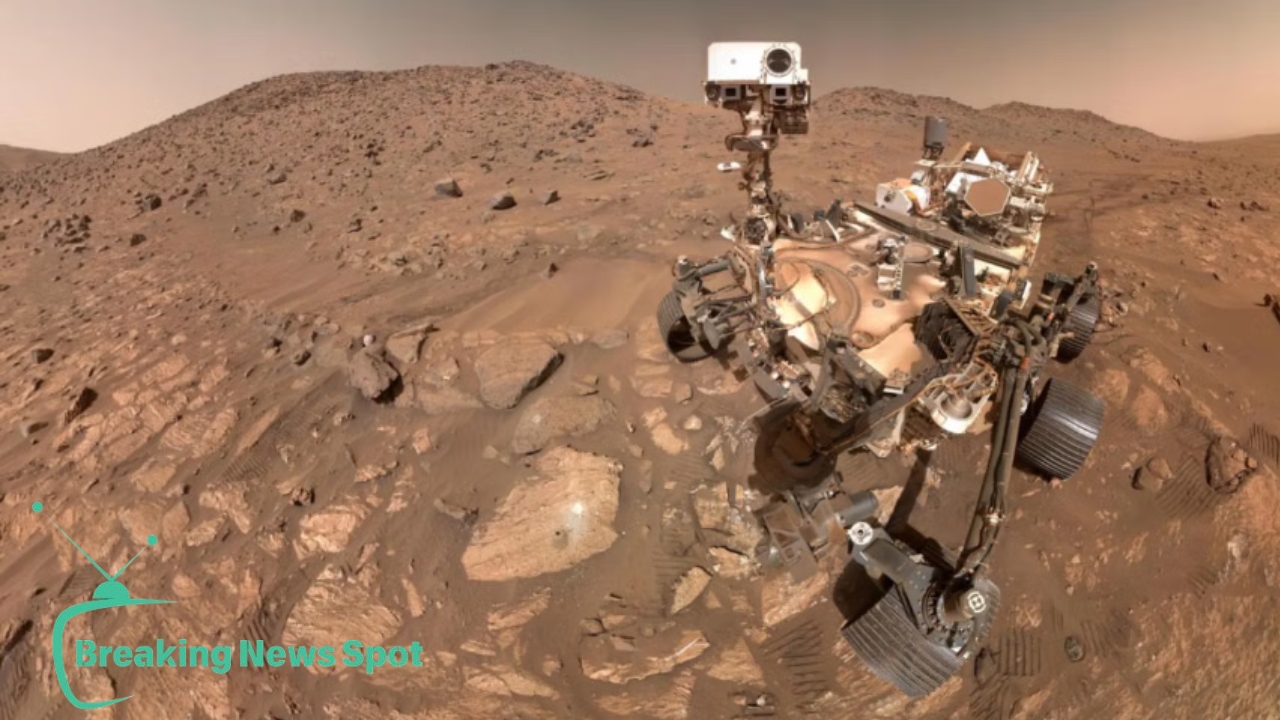

Possible signs of ancient life on Mars are discovered by a NASA rover.

NASA’s Perseverance Mars rover may have found signs of ancient microbial life on Mars, according to a study published on September 10 in the scientific journal Nature.

NASA said the results are based on a rock sample collected from an ancient, dry riverbed in Jezero Crater that once carried water into the crater’s basin. The rock, named ‘Cheyava Falls’, was sampled in 2024 while the rover was studying a formation known as Bright Angel.

According to NASA, the sample, now known as “Sapphire Canyon,” contained mineral patterns and chemical compounds that scientists believe could be potential biosignatures: features that may be connected to biological activity but need more research before any conclusions about life can be drawn.

Within the sedimentary rocks, Perseverance’s instruments found organic carbon, sulfur, phosphorus, and oxidized iron, ingredients that can sustain microbial metabolisms on Earth.

The instruments also detected iron-rich minerals such as greigite (iron sulfide) and vivianite (hydrated iron phosphate), which are known to occur in conjunction with microbial activity or decomposing organic matter. However, the evidence is ambiguous, since these mineral signatures can also be produced by non-biological processes.

According to experts, the finding was surprising because it came from relatively young sedimentary rocks, contradicting previous theories that only older Martian formations might retain traces of life. If verified, it would indicate that Mars was habitable later in its history than previously thought.

Since it began working at Jezero Crater in 2021, Perseverance has gathered 27 rock cores for analysis. In addition to continuing its research, NASA intends to utilize the rover to test materials for possible spacesuits and monitor environmental conditions in order to get ready for future human flights.

Tech

Gen Z selected Nepal’s new prime minister over Discord before the announcement was made.

Discord is a social networking service more commonly associated with online gaming, but last week hundreds of teenagers used it to back Nepal’s next leader, giving the nation’s political crisis a new dimension.

Following weeks of turmoil, their candidate, former Nepalese Chief Justice Sushila Karki, was sworn in as Nepal’s first female prime minister on September 12 to lead an interim administration.

After officials in the Himalayan state tried to limit social media use, Generation Z (those born approximately between 1997 and 2012) took the lead in the demonstrations, which were mostly against corruption.

Using online platforms, protesters, many of whom were in their teens and twenties, organized marches, shared information, and ultimately voted on possible candidates. As India Today recently reported, the best-known hub was a Discord server dubbed ‘Youth Against Corruption’, which at its peak had over 130,000 members.

In the final round on September 10, 7,713 ballots were cast, and Karki won more than 50% of the vote, according to a recent article from the South China Morning Post. Prior to her formal appointment on September 12, Karki met with General Ashok Raj Sigdel, the chief of Nepal’s army, and President Ram Chandra Poudel.

It’s unclear, though, if the Discord vote had a direct impact on Sushila Karki’s official appointment as Nepal’s prime minister.

Discord was first created in 2015 by Jason Citron and Stanislav Vishnevskiy as a way for gamers to interact while playing. Since then, a younger audience has been drawn to its straightforward interface and combination of text, audio, and video features, particularly during the pandemic when usage grew well beyond the realm of games.

Hundreds of thousands of people can join Discord servers, forming large online communities with news, debate, and coordination channels.

The platform became a vital organizing tool for the protests in Nepal. In addition to the leadership vote, volunteers set up channels for fact-checking, emergency support, and logistics within the Youth Against Corruption server. This setup let protesters manage information flows in real time while staying away from the major social media sites that had been blocked, including Facebook, Instagram, X, and LinkedIn.

Tech

Bangladesh will be represented at Formula SAE Japan 2025 by the RUET team.

At one of the most prominent engineering design contests in the world, Formula SAE Japan 2025, where students construct and compete in formula-style electric vehicles, Team Crack Platoon from Rajshahi University of Engineering and Technology (RUET) is representing Bangladesh.

Formula SAE Japan 2025 got underway at Aichi Sky Expo on September 8 and will run through September 13. Ninety teams were chosen this year from a large number of international applications; the only Bangladeshi team to make it was Team Crack Platoon from RUET. Since no other South Asian team has made it to the competition this year, they are also representing the region.

The Society of Automotive Engineers (SAE) organizes the competition every year. Widely regarded as the “Olympics of Engineering Students”, it challenges competitors to design, build, and test vehicles that adhere to exacting international standards. Teams are graded on several criteria, including design, cost analysis, business presentation, and on-track performance.

Team Crack Platoon will be racing with CP-Astrion, their third-generation electric race car. It has a lightweight composite frame, wings designed to reduce drag more effectively, and a battery system backed by Tesla. According to the team, the car was built to meet Formula SAE’s international safety and performance standards.

“We’re excited to learn from the best and show that Bangladeshi students can compete on this global stage,” team captain Saadman Saqueeb said of the competition. “The story of CP-Astrion, which was created with little funding but a great deal of enthusiasm and collaboration, is what sets us apart.”

The team hopes that sharing their experience will inspire other students to explore innovation. “We want our journey to demonstrate that Bangladesh can produce world-class engineering,” Saqueeb continued. “We believe that if we can do it, anybody can, and we hope that this encourages creativity and experiential learning throughout South Asia.”

The Crack Platoon team has 28 members working across several departments, including design, business operations, electrical, and mechanical. The departments are led by Team Captain Saadman Saqueeb, Electrical Head Sudipto Mondal, Mechanical Head Md Habibullah, Design Head Md Nayem, and Business Head Rafiul Haque Ayon.
